I wrote this post 3 years ago to show the impact technical SEO can have on your organic traffic.

The crazy thing is, if they had maintained the structure we had in place, they'd still be receiving millions of organic visits.

In this post, I'll cover:

How to leverage your site's internal link equity and your strongest pages' citation flow, and how to use it to your full advantage to send link equity to the pages that need it most.

Optimizing your site's crawl budget to make sure you're not wasting Googlebot's time crawling pages with thin or near-duplicate content.

The power of properly built and optimized sitemaps, especially for large (enterprise) UGC websites.

How to take advantage of "one time events" to produce bursts in organic traffic.

Let's dive in.

Website background

The site wasn't new and had a solid amount of equity with search engines.

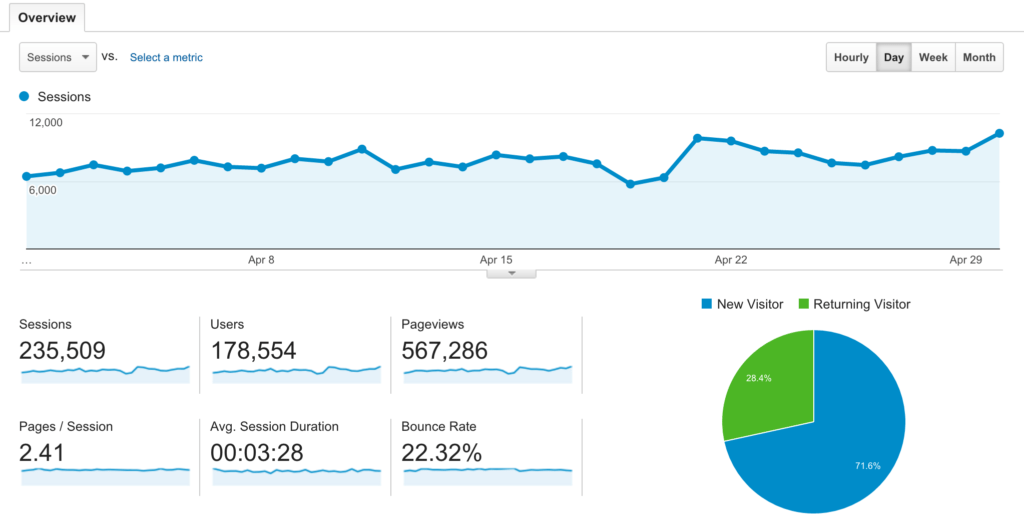

It had a decent base of organic traffic (~230k/mo), although engagement from search wasn't great.

We created NO content (yes, ZERO); this site ran on 100% user-generated content (UGC).

I had no team outside of myself, the owner, and his developer.

We had no advertising spend of any kind; all traffic coming to the site was organic.

Many of these principles (if not all) can be applied to new sites, but in this case, these changes were used to unlock growth potential.

Key Drivers To Growth

The following 3 areas were the strategic elements that had the biggest impact on organic growth.

1. Continuous Change

To revisit a still hugely important piece of SEO: pumpkin hacking. We didn't just pay attention to which pages were picking up the most steam in terms of traffic; we also took some extra steps to push as much support to those pages as we could.

To give these pages that little extra boost, we used one of the most powerful tools we had for search: our website.

Sounds super obvious and a bit stupid, doesn't it? But seriously, this is perhaps the most commonly overlooked factor in driving organic growth, which brings me to my next point:

2. On-Site SEO

We added links with target anchors to the homepage, created new sections on top-level pages to display links for "featured content" around the site, and baked new views into site-wide navigation.
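Choosing which pages are "picking up steam" and surfacing them in a featured-content section can be sketched roughly as follows. This is a minimal illustration, assuming a hypothetical weekly traffic export (the `PageTraffic` records with previous- and current-week visits); the growth metric, the `min_visits` floor and the `featured-content` markup are all assumptions, not the setup YourListen actually used.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class PageTraffic:
    url: str            # page to promote internally
    anchor: str         # target anchor text for the internal link
    visits_prev: int    # visits in the previous period (hypothetical export)
    visits_curr: int    # visits in the current period


def trending_pages(pages, min_visits=100, top_n=5):
    """Rank pages by period-over-period growth, skipping low-volume noise."""
    candidates = [p for p in pages if p.visits_curr >= min_visits]

    def growth(p):
        # Relative growth; max(..., 1) avoids dividing by zero for new pages.
        return (p.visits_curr - p.visits_prev) / max(p.visits_prev, 1)

    return sorted(candidates, key=growth, reverse=True)[:top_n]


def featured_links_html(pages):
    """Render a block of internal links using each page's target anchor."""
    items = "".join(
        f'<li><a href="{escape(p.url)}">{escape(p.anchor)}</a></li>' for p in pages
    )
    return f'<ul class="featured-content">{items}</ul>'
```

The floor on current visits keeps a page jumping from 2 to 20 visits from outranking one that is genuinely taking off; the resulting HTML could then be dropped into the homepage or a top-level page template.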

In addition, there were some very specific things wrong with the way the code was built and rendered on the pages, which I'll come back to in a bit.

Cleaning up the site's foundation, using a mix of Google Search Console, a lot of blood and sweat in development hours to get things right, and amazing tools like URL Profiler, has proven invaluable.

3. One-time Events

One interesting tool that only some of the smartest digital marketers on the internet know about is traffic leaks. This strategy involves engineering large amounts of short-term traffic to your site by acquiring (or leaking) that traffic from another site that already has all the traffic you want.

Perhaps the most popular site for running these kinds of campaigns is Reddit. We didn't use Reddit, but we did put a lot of time into building relationships with people who held similar keys to kingdoms of huge traffic.

As a music and audio hosting site, after taking a hard look at our analytics, we found that our big traffic events happened when new music was released, and more specifically, when it was leaked ahead of its official release. So how do we make sure we become the source for those leaks? Build relationships and mindshare with the people who have access to this content.

To do this, we leveraged the relationships with the lowest barrier to rejection: existing power users on the platform.

You'd be amazed at how many people who use your tools are genuinely willing to help, just because you ask.

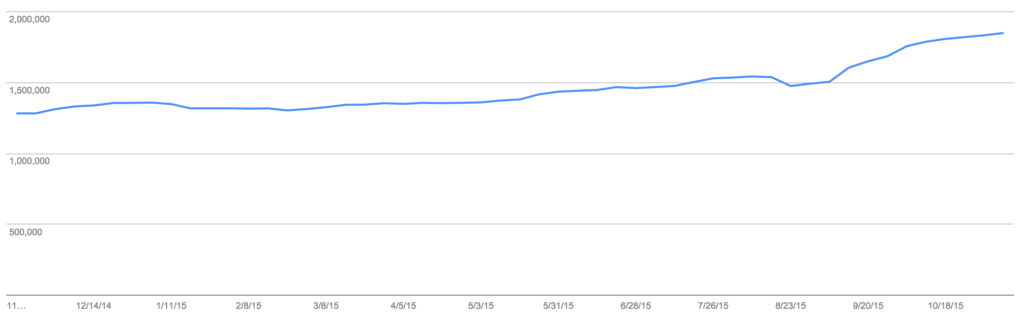

A Look At Traffic Growth

When I first joined the YourListen team:

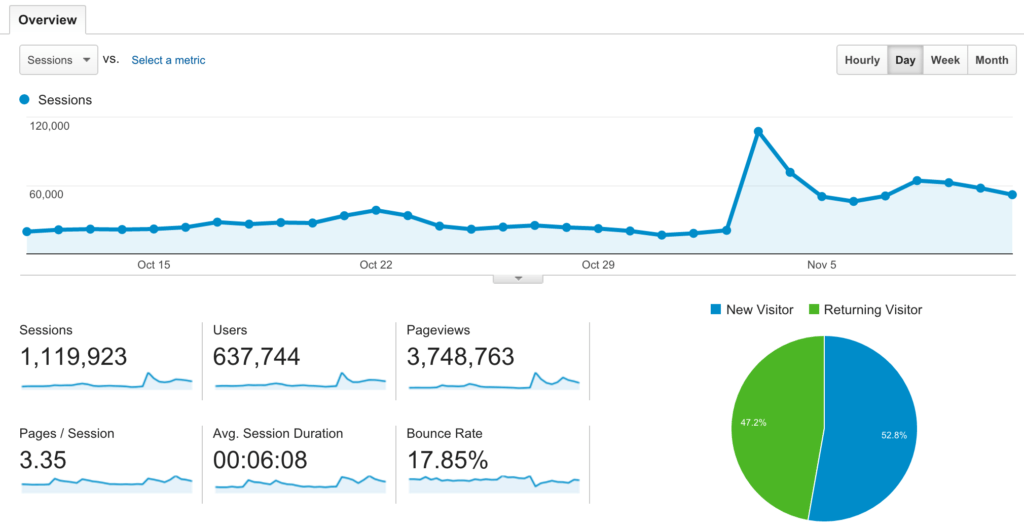

18 months later:

When I first came on, the site was averaging between 7,000 and 8,000 visits per day. Not long after we found and implemented these changes, the site shot up to an average of 25,000 visits per day.

We did finally hit that milestone (as you can see above); the sustainable run-rate the site has since leveled off at is closer to 800,000 visits per month.

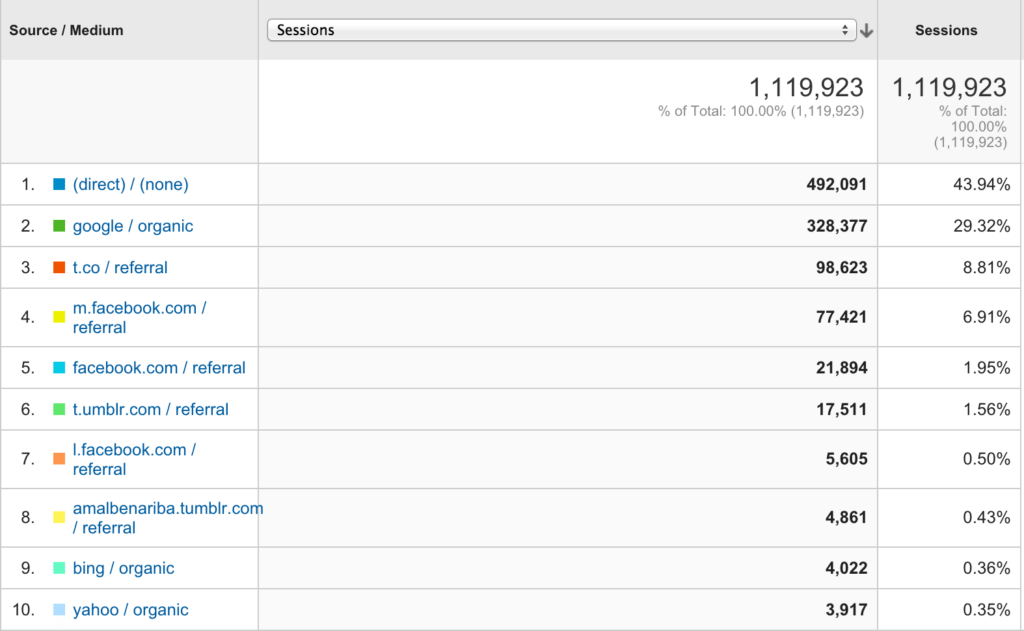

Here's how that traffic breaks down in terms of acquisition channels:

How to Leverage "One Time Events"

Coming back to this idea, because it's critical to hitting these kinds of traffic levels: when you're able to generate these big traffic events a couple of times per year, it has an amazing effect on your overall residual traffic.

What we've noticed is that even after the URLs that drive these traffic spikes stop receiving huge temporary bursts of traffic, the overall site traffic lifts up and stays there.

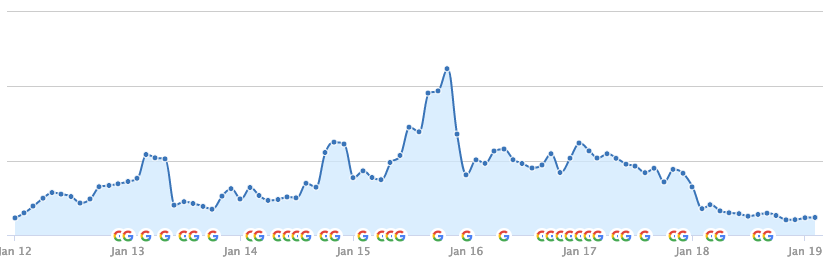

Notice in the screenshot above that 3 days after the spike, just as traffic starts to level back off, it begins to grow again, all on its own.
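One way to put a number on that residual lift is to compare average daily visits in the week before a spike with the week after it settles. The sketch below assumes nothing more than a plain list of daily visit counts; the spike rule (more than 3× the trailing 7-day median) and the `settle_days=3` default, borrowed from the 3 days in the screenshot, are illustrative choices rather than anything measured on YourListen.

```python
from statistics import mean, median


def find_spikes(daily_visits, window=7, factor=3.0):
    """Return indexes of days whose visits exceed `factor` x the trailing median."""
    spikes = []
    for i in range(window, len(daily_visits)):
        baseline = median(daily_visits[i - window:i])
        if daily_visits[i] > factor * baseline:
            spikes.append(i)
    return spikes


def residual_lift(daily_visits, spike_day, window=7, settle_days=3):
    """Ratio of the post-spike baseline to the pre-spike baseline.

    The post-spike window starts `settle_days` after the spike so the
    decaying tail of the spike itself is not counted as lift.
    """
    before = daily_visits[spike_day - window:spike_day]
    start = spike_day + settle_days + 1
    after = daily_visits[start:start + window]
    if spike_day < window or len(after) < window:
        raise ValueError("not enough data around the spike")
    return mean(after) / mean(before)
```

A ratio above 1.0 means the site settled at a higher baseline than before the event; using a median for spike detection keeps one big day from inflating the baseline for the days right after it.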

What's Causing This?

Those large spurts of traffic generate awareness, which leads to an increase in traffic from all channels: direct, social, and of course organic. They directly lead to increases in branded search, type-in traffic, and social referrals, all sending a lot of positive trust signals throughout the interwebs. All of these contribute to how your website is perceived from a search engine perspective, not to mention the links :)

One of the leaks on YL gained so much attention that it earned links from some big websites like Billboard, USA Today, and other major news outlets, not to mention a phone call from Prince.

Back To On-Site SEO

There were some really specific things that needed to be fixed, as well as some enhancements that started to really make a difference.

Let's start with the things that we needed to clean up.

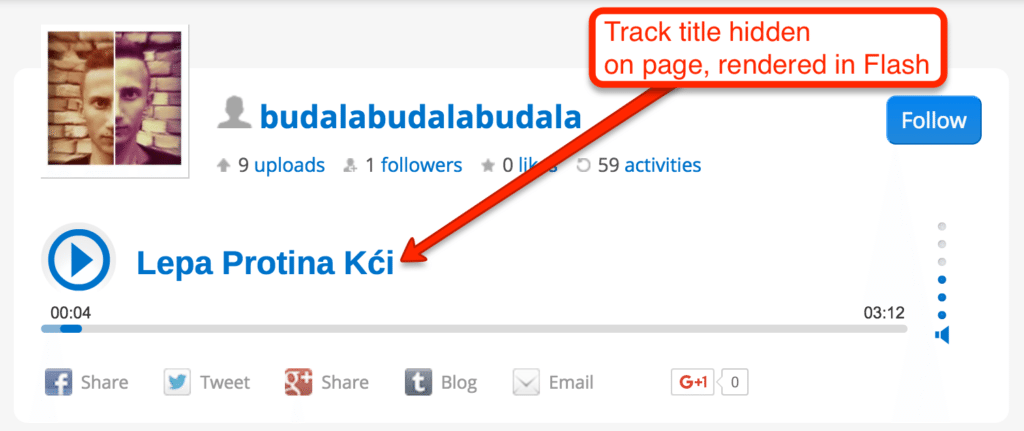

Taking a closer look at the track pages (the individual URLs that make up more than 95% of the site's content), I realized that the track titles being displayed lived within the custom Flash player, not in the page's HTML.

Not only did the page have no H1, but the track title was rendered in the HTML and then hidden with a display:none rule in the CSS.

Google parses all of this HTML, and hidden text like this looks shady as hell.

The fix was simple: pull the track title out of the Flash player, turn on the HTML version, and wrap it in an <h1> tag.
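To catch this kind of problem across millions of track pages, a simple audit can check each page's HTML for a visible H1. The sketch below uses Python's standard `html.parser`; the function name and the sample markup are hypothetical. Note that it only detects `display:none` in inline `style` attributes, so a rule living in an external stylesheet (as on YourListen) would still need a rendered-page check.

```python
from html.parser import HTMLParser


class H1Audit(HTMLParser):
    """Collects <h1> text and records tags hidden with an inline display:none."""

    def __init__(self):
        super().__init__()
        self.in_h1 = False
        self.h1_texts = []
        self.hidden_tags = []

    def handle_starttag(self, tag, attrs):
        # Attribute values can be None (e.g. <input disabled>), hence `or ""`.
        style = (dict(attrs).get("style") or "").replace(" ", "").lower()
        if "display:none" in style:
            self.hidden_tags.append(tag)
        if tag == "h1":
            self.in_h1 = True
            self.h1_texts.append("")

    def handle_endtag(self, tag):
        if tag == "h1":
            self.in_h1 = False

    def handle_data(self, data):
        if self.in_h1:
            self.h1_texts[-1] += data


def audit_track_page(html):
    """Summarize H1 usage and inline-hidden elements for one page."""
    parser = H1Audit()
    parser.feed(html)
    titles = [t.strip() for t in parser.h1_texts if t.strip()]
    return {
        "h1_count": len(titles),
        "h1": titles[0] if titles else None,
        "hidden_tags": parser.hidden_tags,
    }
```

A page that reports `h1_count == 0` while also hiding elements is the pattern described above: the title exists in the markup but nobody, human or Googlebot, is meant to see it.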

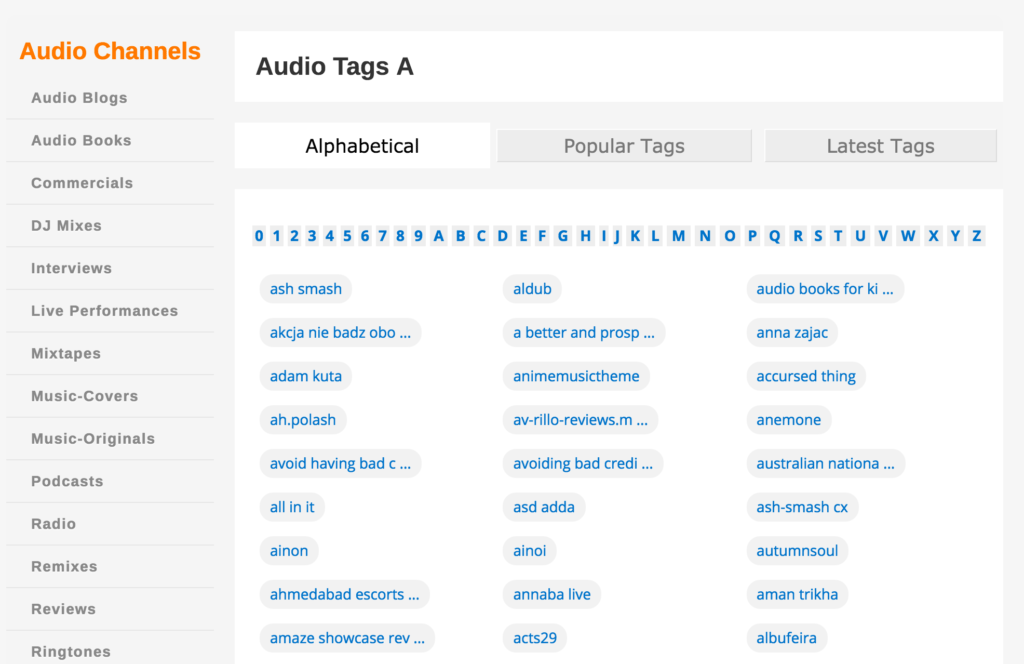

URL Architecture

Looking at the way the URLs were built, not only were they not using ideal naming conventions to create topical relevancy, but they were all flat.

Flat URLs work when you have extremely horizontal content sets, like Wikipedia, but they fall short when you have big top-level content groups that all of your other content fits into.

So we organized all the content types into two master parent directories: Audio and Music.
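Moving from flat URLs to parent directories usually means generating the new paths and a 301 redirect map from old to new. The post doesn't show YourListen's actual URL patterns, so the flat `/{id}` format, the `/{parent}/{slug}-{id}` format and the helper names below are all hypothetical; this is a sketch of the idea, not the scheme that was deployed.

```python
import re
import unicodedata

# Hypothetical mapping from a content type to its master parent directory.
PARENT_DIRS = {"music": "music", "audio": "audio"}


def slugify(title):
    """Lowercase ASCII slug: accents stripped, non-alphanumerics become hyphens."""
    normalized = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    slug = re.sub(r"[^a-z0-9]+", "-", normalized.lower()).strip("-")
    return slug or "untitled"


def nested_track_url(content_type, title, track_id):
    """Build a nested URL such as /music/<slug>-<id> (hypothetical format)."""
    parent = PARENT_DIRS.get(content_type.lower())
    if parent is None:
        raise ValueError(f"unknown content type: {content_type!r}")
    return f"/{parent}/{slugify(title)}-{track_id}"


def redirect_map(tracks):
    """Old flat URL -> new nested URL, ready to feed into 301 rules."""
    return {
        f"/{t['id']}": nested_track_url(t["type"], t["title"], t["id"])
        for t in tracks
    }
```

Keeping the numeric ID in the slug keeps every URL unique even when two tracks share a title, while the parent directory and descriptive slug carry the topical signal.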

Optimizing Crawl Budget

This is far from a new concept; however, I still constantly meet and speak to people who give this no consideration.

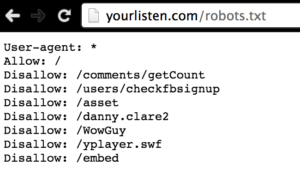

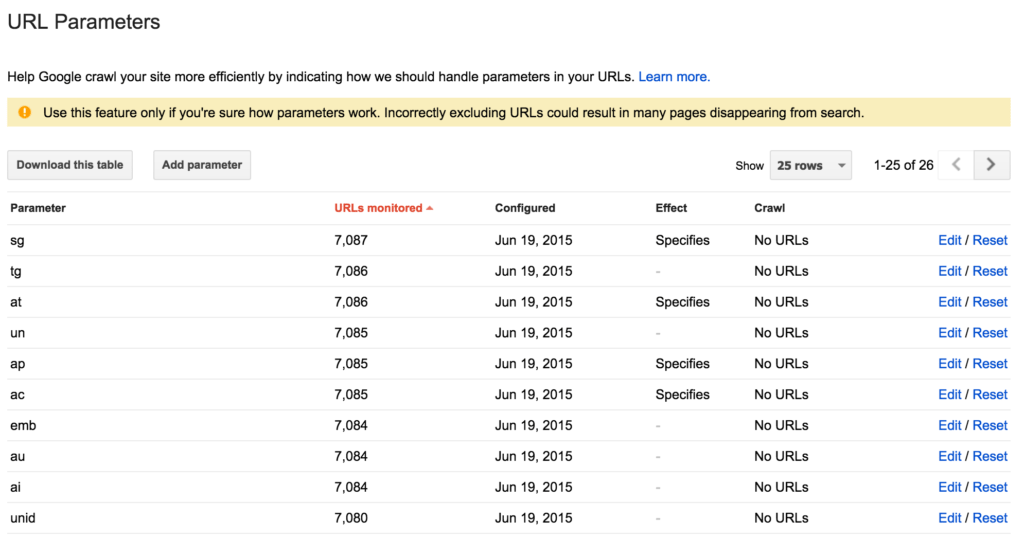

Google doesn't exactly have limited resources, but based on what it finds when it crawls your site, it does start to pay less attention to your pages and assign them less importance. Using a combination of your site's robots.txt file and the URL Parameter management console within Google Search Console, you can steer Googlebot away from the pages that waste its time. What I'm talking about is filtering out URL parameters that only generate duplicate or near-duplicate variations of a page.
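Before deciding which parameters to filter, it can help to see how many distinct pages remain once the noise parameters are stripped. The sketch below does that with the standard `urllib.parse` module; the parameter names in `IGNORED_PARAMS` are hypothetical stand-ins, since the post doesn't list the ones YourListen blocked.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that only create duplicate variations of a page.
IGNORED_PARAMS = {"sort", "ref", "autoplay", "utm_source", "utm_medium", "utm_campaign"}


def canonical_crawl_url(url):
    """Drop ignored parameters and sort the rest so equivalent URLs compare equal."""
    parts = urlsplit(url)
    kept = sorted(
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k not in IGNORED_PARAMS
    )
    # Fragments never reach the server, so they are dropped as well.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def dedupe_crawl_targets(urls):
    """Unique canonical URLs, in first-seen order."""
    seen = {}
    for url in urls:
        seen.setdefault(canonical_crawl_url(url), url)
    return list(seen)
```

Running a log of crawled URLs through `dedupe_crawl_targets` gives a rough sense of how much of Googlebot's time goes to variations of the same page.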

For YourListen, this meant taking the URLs that hosted the embeddable player, the source .swf files, and a handful of code assets and moving them into their own directories so they could be blocked from being crawled.

We then went into the URL Parameter manager in WMT (Webmaster Tools, now Search Console) and blocked all the parameters that don't merit having every variation crawled:

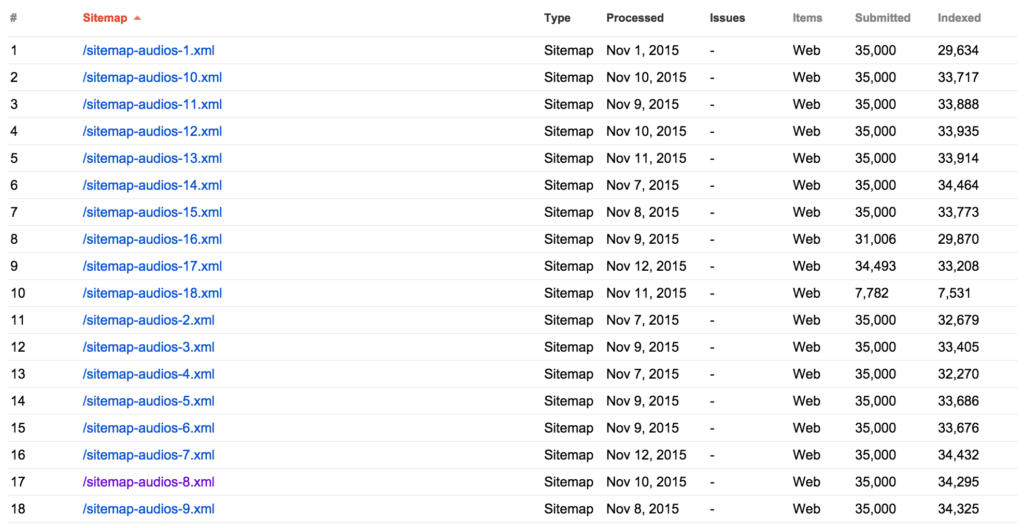
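The directory moves described above pay off because a single `Disallow` line per directory can then cover every embed, .swf and asset URL. The sketch below shows such a robots.txt and checks it with Python's standard `urllib.robotparser`; the directory names `/embed/`, `/swf/` and `/assets/` are hypothetical, since the post doesn't give the real paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical directories; the real YourListen paths aren't given in the post.
ROBOTS_TXT = """\
User-agent: *
Disallow: /embed/
Disallow: /swf/
Disallow: /assets/
"""


def build_parser(robots_txt):
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_crawlable(parser, url, user_agent="Googlebot"):
    """True if `user_agent` may fetch `url` under the parsed rules."""
    return parser.can_fetch(user_agent, url)
```

Checking a sample of real URLs against the file before deploying is a cheap way to confirm the player and asset URLs are blocked while the track pages stay crawlable.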

Building Optimized Sitemaps

To all the veteran SEOs reading this: you already know how important these files are, but let me share some of what I learned.

I've worked on large sites, but I can honestly say that YourListen was probably the largest, with millions of individual content pages.

In case you didn't know, Google limits individual sitemap files to 50,000 URLs and, at the time, 10MB (the size cap has since been raised to 50MB uncompressed).

But based on first-hand experience, we found that capping individual XML sitemaps at 35,000 URLs yielded a far more effective crawl.

Furthermore, we found that not only did nesting sitemap files into sitemap indexes improve the crawl rate, but actually linking to the sitemap files (with plain <a> tags) also helped us maximize the number of URLs parsed during each crawl.
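The setup above (35,000-URL files, a sitemap index, and an HTML page of plain links to each file) can be sketched in a few functions. This is a minimal illustration, assuming you already have the full list of page URLs; the `base` URL, the `tracks-N.xml` file names and the function names are hypothetical.

```python
from html import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 35_000  # the cap the post found crawled better than 50,000


def chunk(urls, size=MAX_URLS_PER_SITEMAP):
    """Split page URLs into batches of at most `size`."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def render_urlset(urls):
    """One sitemap file; escape() turns & in query strings into &amp;."""
    entries = "".join(f"<url><loc>{escape(u)}</loc></url>" for u in urls)
    return f'<?xml version="1.0" encoding="UTF-8"?>\n<urlset xmlns="{SITEMAP_NS}">{entries}</urlset>'


def render_index(sitemap_urls):
    """Sitemap index pointing at every individual sitemap file."""
    entries = "".join(f"<sitemap><loc>{escape(u)}</loc></sitemap>" for u in sitemap_urls)
    return f'<?xml version="1.0" encoding="UTF-8"?>\n<sitemapindex xmlns="{SITEMAP_NS}">{entries}</sitemapindex>'


def render_html_links(sitemap_urls):
    """Plain <a> links to each sitemap file, for a crawlable HTML page."""
    items = "".join(f'<li><a href="{escape(u)}">{escape(u)}</a></li>' for u in sitemap_urls)
    return f"<ul>{items}</ul>"


def build_sitemaps(page_urls, base="https://example.com/sitemaps"):
    """Return ({sitemap_url: xml}, index_xml, html_links)."""
    files = {}
    for n, batch in enumerate(chunk(page_urls), start=1):
        files[f"{base}/tracks-{n}.xml"] = render_urlset(batch)
    sitemap_urls = list(files)
    return files, render_index(sitemap_urls), render_html_links(sitemap_urls)
```

For example, 80,000 track URLs produce three files of 35,000, 35,000 and 10,000 URLs, one index listing all three, and an HTML list with three links.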

Finally, I found that after uploading the sitemaps, it helped not only to test them for errors (confirming to Google that they're properly formatted and error-free) but also to resubmit them every day for a few days, essentially brute-forcing the re-crawl.

Unfortunately, you can't set a date range in Search Console, so I can't show how big a difference the above changes made to the index rate, but I can show the effect they've had over at least the past 10 months:
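Search Console runs its own checks when you test a sitemap, but catching obvious problems locally first saves a round trip. The sketch below, using the standard `xml.etree.ElementTree`, checks a few basics from the sitemaps protocol (well-formed XML, the `urlset` root in the sitemap namespace, at most 50,000 URLs and 50MB uncompressed, absolute URLs); the function name and error messages are hypothetical, and this is not a substitute for Google's own validation.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
NS = {"sm": SITEMAP_NS}
PROTOCOL_MAX_URLS = 50_000
PROTOCOL_MAX_BYTES = 50 * 1024 * 1024  # 50MB uncompressed


def validate_sitemap(xml_text):
    """Return a list of problems found; an empty list means the basics pass."""
    errors = []
    raw = xml_text.encode("utf-8")
    if len(raw) > PROTOCOL_MAX_BYTES:
        errors.append("file exceeds 50MB uncompressed")
    try:
        root = ET.fromstring(raw)
    except ET.ParseError as exc:
        return errors + [f"not well-formed XML: {exc}"]
    if root.tag != f"{{{SITEMAP_NS}}}urlset":
        errors.append(f"unexpected root element: {root.tag}")
    locs = [(el.text or "").strip() for el in root.findall("sm:url/sm:loc", NS)]
    if len(locs) > PROTOCOL_MAX_URLS:
        errors.append(f"{len(locs)} URLs exceeds the {PROTOCOL_MAX_URLS} limit")
    for loc in locs:
        parts = urlsplit(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            errors.append(f"not an absolute URL: {loc!r}")
    return errors
```

Because the XML is parsed from bytes, files that start with an `<?xml ... encoding="UTF-8"?>` declaration are handled without complaint.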

Key Takeaways

The biggest learning I hope you take away from this post is just how incredibly important it is to be meticulous when it comes to the cleanliness of your site's mark-up and structure.

Leverage your site's internal link equity and your strongest pages' citation flow. Use this to your full advantage to send link equity to the pages that need it most.

Optimize your site's crawl budget and make sure you're not wasting Googlebot's time crawling pages with thin or near-duplicate content.

Don't underestimate the power of properly built and optimized sitemaps, and make sure you're submitting them regularly through GSC.

Put in the work to engineer a few big traffic pops. While each one may only be a flash in the pan in terms of growth, the sum of these parts will create a larger whole.